Installing a personal version of Gromacs

A very popular application on Apocrita is Gromacs, but we only provide modules for fairly simple compilation variants. Some more advanced users may want to compile additional personal versions with more granular compilation options.

Searching for available variants

First of all, let's see what is on offer by default. This time, along with the -x (explicitly installed) and -p (installation prefix) flags we used previously for nano above, we will also add the -v (variant) flag:

spack find output

$ spack -C ${HOME}/spack-config-templates/0.23.1 find -x -p -v gromacs

-- linux-rocky9-x86_64_v4 / gcc@12.2.0 --------------------------

gromacs@2024.1~cp2k~cuda~cycle_subcounters~double+gmxapi+hwloc~intel_provided_gcc~ipo~mdrun_only~mpi+nblib~nosuffix~opencl+openmp~relaxed_double_precision+shared~sycl build_system=cmake build_type=Release generator=make openmp_max_threads=none /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-12.2.0/gromacs/2024.1-yekd62f-serial

gromacs@2024.1~cp2k~cuda~cycle_subcounters~double+gmxapi+hwloc~intel_provided_gcc~ipo~mdrun_only+mpi+nblib~nosuffix~opencl+openmp~relaxed_double_precision+shared~sycl build_system=cmake build_type=Release generator=make openmp_max_threads=none /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-12.2.0/gromacs/2024.1-openmpi-5.0.3-55bdvpa-mpi

gromacs@2024.1~cp2k+cuda~cufftmp~cycle_subcounters~double+gmxapi+hwloc~intel_provided_gcc~ipo~mdrun_only+mpi+nblib~nosuffix~opencl+openmp~relaxed_double_precision+shared~sycl build_system=cmake build_type=Release cuda_arch=70,80,90 generator=make openmp_max_threads=none /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-12.2.0/gromacs/2024.1-openmpi-5.0.3-tzp3elt-mpi

gromacs@2024.3~cp2k+cuda~cufftmp~cycle_subcounters~double+gmxapi+hwloc~intel_provided_gcc~ipo~mdrun_only+mpi+nblib~nosuffix~nvshmem~opencl+openmp~relaxed_double_precision+shared build_system=cmake build_type=Release cuda_arch=70,80,90 generator=make openmp_max_threads=none /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-12.2.0/gromacs/2024.3-openmpi-5.0.3-4tmxysw-mpi

-- linux-rocky9-x86_64_v4 / gcc@14.2.0 --------------------------

gromacs@2024.3~cp2k~cuda~cycle_subcounters~double+gmxapi+hwloc~intel_provided_gcc~ipo~mdrun_only~mpi+nblib~nosuffix~opencl+openmp~relaxed_double_precision+shared build_system=cmake build_type=Release generator=make openmp_max_threads=none /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-14.2.0/gromacs/2024.3-uhqvqaj-serial

gromacs@2024.3~cp2k~cuda~cycle_subcounters~double+gmxapi+hwloc~intel_provided_gcc~ipo~mdrun_only+mpi+nblib~nosuffix~opencl+openmp~relaxed_double_precision+shared build_system=cmake build_type=Release generator=make openmp_max_threads=none /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-14.2.0/gromacs/2024.3-openmpi-5.0.5-q4j2vts-mpi

==> 6 installed packages

As you can see, the output of the spack find command includes a long list of variants for each installation, each marked with either ~ (false, disabled) or + (true, enabled). You can see a list of all possible variants for a particular Spack package in two ways.
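As a small aside before looking at those, the + and ~ markers can be pulled out of a spec line with standard shell tools. The sketch below is illustrative only: list_variants is a hypothetical helper name, the spec string is a shortened copy of one line from the output above, and the pattern assumes a single package's spec with no ^dependency parts.

```shell
# Shortened spec fragment copied from the `spack find -v` output above.
spec='gromacs@2024.1~cp2k~cuda+gmxapi+hwloc~mpi+openmp+shared'

# Hypothetical helper: print the variants carrying the given marker
# ('+' for enabled, '~' for disabled), one per line.
list_variants() {
    marker="$1"
    spec_string="$2"
    # grep -o emits each "+name" or "~name" match; cut drops the marker itself.
    printf '%s\n' "${spec_string}" | grep -o "[${marker}][A-Za-z0-9_-]*" | cut -c2-
}

list_variants '+' "${spec}"   # enabled variants
list_variants '~' "${spec}"   # disabled variants
```

With the shortened spec above, the first call lists gmxapi, hwloc, openmp and shared, and the second lists cp2k, cuda and mpi. Key=value variants such as build_type=Release are deliberately ignored by this pattern.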

Spack Packages website

Package information is for the develop branch

The Spack Packages website lists information such as available versions and variants from the develop branch of Spack. Some versions and variants may not be available in the specific Spack release you are using.

The Spack Packages website is an online catalogue of all available packages in Spack. The page for Gromacs can be found here:

https://packages.spack.io/package.html?name=gromacs

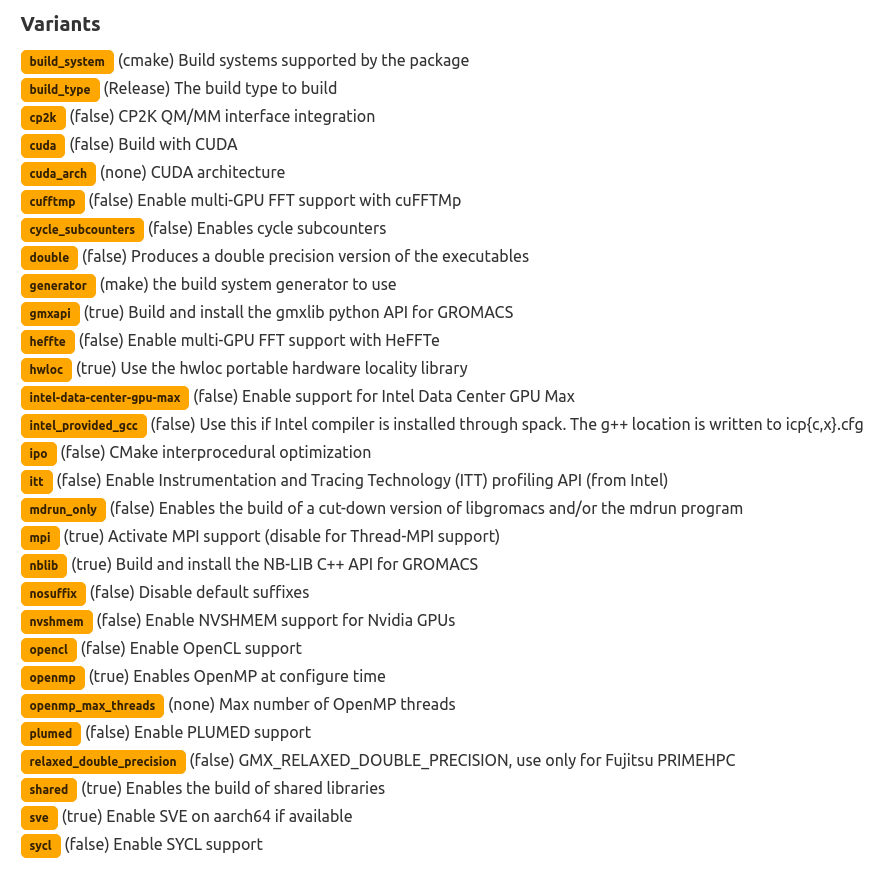

You'll see available versions, dependencies, maintainers and various other information, but for the purposes of this tutorial, the most important section is "Variants":

The list is quite long, and the default for each variant, (true) or (false), is shown in brackets after its name. For the centrally compiled versions, we have largely stuck to the defaults.

The spack info command

Another way to check which variants are available is to use the spack info command:

spack info output

$ spack -C ${HOME}/spack-config-templates/0.23.1 info gromacs

CMakePackage: gromacs

Description:

GROMACS is a molecular dynamics package primarily designed for

simulations of proteins, lipids and nucleic acids. It was originally

developed in the Biophysical Chemistry department of University of

Groningen, and is now maintained by contributors in universities and

research centers across the world. GROMACS is one of the fastest and

most popular software packages available and can run on CPUs as well as

GPUs. It is free, open source released under the GNU Lesser General

Public License. Before the version 4.6, GROMACS was released under the

GNU General Public License.

Homepage: https://www.gromacs.org

Preferred version:

2024.3 https://ftp.gromacs.org/gromacs/gromacs-2024.3.tar.gz

Safe versions:

main [git] https://gitlab.com/gromacs/gromacs.git on branch main

2024.3 https://ftp.gromacs.org/gromacs/gromacs-2024.3.tar.gz

(etc.)

Deprecated versions:

master [git] https://gitlab.com/gromacs/gromacs.git on branch main

Variants:

build_system [cmake] cmake

Build systems supported by the package

build_type [Release] Debug, MinSizeRel, Profile, Reference, RelWithAssert, RelWithDebInfo, Release

The build type to build

cp2k [false] false, true

CP2K QM/MM interface integration

cuda [false] false, true

Build with CUDA

cycle_subcounters [false] false, true

Enables cycle subcounters

double [false] false, true

Produces a double precision version of the executables

hwloc [true] false, true

Use the hwloc portable hardware locality library

intel_provided_gcc [false] false, true

Use this if Intel compiler is installed through spack.The g++ location is written to icp{c,x}.cfg

mdrun_only [false] false, true

Enables the build of a cut-down version of libgromacs and/or the mdrun program

mpi [true] false, true

Activate MPI support (disable for Thread-MPI support)

nosuffix [false] false, true

Disable default suffixes

opencl [false] false, true

Enable OpenCL support

openmp [true] false, true

Enables OpenMP at configure time

openmp_max_threads [none] none

Max number of OpenMP threads

relaxed_double_precision [false] false, true

GMX_RELAXED_DOUBLE_PRECISION, use only for Fujitsu PRIMEHPC

shared [true] false, true

Enables the build of shared libraries

when +cuda

cuda_arch [none] none, 10, 11, 12, 13, 20, 21, 30, 32, 35, 37, 50, 52, 53, 60, 61, 62, 70, 72, 75, 80, 86, 87, 89, 90, 90a

CUDA architecture

when build_system=cmake

generator [make] none

the build system generator to use

when build_system=cmake ^cmake@3.9:

ipo [false] false, true

CMake interprocedural optimization

when @2022:+cuda+mpi

cufftmp [false] false, true

Enable multi-GPU FFT support with cuFFTMp

when @2021:+mpi+sycl

heffte [false] false, true

Enable multi-GPU FFT support with HeFFTe

when @2021:%clang

sycl [false] false, true

Enable SYCL support

when @2022:+sycl

intel-data-center-gpu-max [false] false, true

Enable support for Intel Data Center GPU Max

when @2021:

nblib [true] false, true

Build and install the NB-LIB C++ API for GROMACS

when @2019:

gmxapi [true] false, true

Build and install the gmxlib python API for GROMACS

when @2024:+cuda+mpi

nvshmem [false] false, true

Enable NVSHMEM support for Nvidia GPUs

when arch=None-None-neoverse_v1:,neoverse_v2:,neoverse_n2:

sve [true] false, true

Enable SVE on aarch64 if available

when arch=None-None-a64fx

sve [true] false, true

Enable SVE on aarch64 if available

when @2016.5:2016.6,2018.4,2018.6,2018.8,2019.2,2019.4,2019.6,2020.2,2020.4:2020.7,=2021,2021.4:2021.7,2022.3,2022.5,=2023

plumed [false] false, true

Enable PLUMED support

Build Dependencies:

blas cmake cp2k cuda fftw-api gcc gmake heffte hwloc lapack mpi ninja nvhpc pkgconfig plumed sycl

Link Dependencies:

blas cp2k cuda fftw-api gcc heffte hwloc lapack mpi nvhpc plumed sycl

Run Dependencies:

None

Licenses:

GPL-2.0-or-later@:4.5

LGPL-2.1-or-later@4.6

Crucially, unlike the Spack Packages website, the output of spack info is specific to the version of Spack in use. However, you may find the Spack Packages website easier to read.

Installing specific variants

Enable PLUMED support

PLUMED support is only available in version 2023 and earlier

As the output of spack info above shows, the plumed variant is only offered for specific Gromacs releases, the most recent being 2023.

Add a suffix for your private PLUMED module

By default, the fact that PLUMED support has been added won't be reflected in your private module name. We recommend adding:

gromacs:
  suffixes:
    +plumed: plumed

to the tcl: section of your personal modules.yaml configuration file to help differentiate it. It is already included in the template modules.yaml file in the spack-config-template repository.

One compilation variant that users often request for Gromacs is PLUMED support. As we saw above, the default for the plumed variant is (false), which Spack commands and output write as ~plumed.

Always run a spec command before install!

You should always check what is about to be installed using the spec command as detailed below, and only then move on to the install command.
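One way to make this habit harder to skip is a small wrapper that always shows the spec first. This is only a sketch under stated assumptions: spec_then_install and SPACK_CFG are hypothetical names, the configuration path and the -j ${NSLOTS} option simply mirror the commands used on this page, and NSLOTS falls back to 1 when it is not set (for example outside a job).

```shell
# Configuration scope used by the commands on this page (adjust if yours differs).
SPACK_CFG="${HOME}/spack-config-templates/0.23.1"

# Hypothetical wrapper: print the concretised spec, then install only on confirmation.
# All arguments form the spec, e.g.: gromacs +plumed ^openmpi ^fftw+openmp
spec_then_install() {
    # Stop here if the spec cannot be concretised.
    spack -C "${SPACK_CFG}" spec "$@" || return 1

    printf 'Proceed with install? [y/N] '
    read -r answer
    case "${answer}" in
        y|Y) spack -C "${SPACK_CFG}" install -j "${NSLOTS:-1}" "$@" ;;
        *)   echo "Install skipped." ;;
    esac
}
```

Calling spec_then_install gromacs +plumed ^openmpi ^fftw+openmp would then print the full spec and wait for a y before anything is built.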

Let's see if we can spec a personal installation of Gromacs with PLUMED support added. Note that we have added ^openmpi ^fftw+openmp to the commands below; this tells Spack to compile against Open MPI (as opposed to, say, Intel MPI) and to reuse the existing +openmp variant of fftw from upstream instead of compiling a new one:

spack spec output

$ spack -C ${HOME}/spack-config-templates/0.23.1 spec gromacs +plumed ^openmpi ^fftw+openmp

- gromacs@2023%gcc@14.2.0~cp2k~cuda~cycle_subcounters~double+gmxapi+hwloc~intel_provided_gcc~ipo~mdrun_only+mpi+nblib~nosuffix~opencl+openmp+plumed~relaxed_double_precision+shared build_system=cmake build_type=Release generator=make openmp_max_threads=none arch=linux-rocky9-x86_64_v4

[^] ^cmake@3.27.9%gcc@12.2.0~doc+ncurses+ownlibs build_system=generic build_type=Release arch=linux-rocky9-x86_64_v4

[^] ^curl@8.7.1%gcc@12.2.0~gssapi~ldap~libidn2~librtmp~libssh~libssh2+nghttp2 build_system=autotools libs=shared,static tls=openssl arch=linux-rocky9-x86_64_v4

[^] ^nghttp2@1.57.0%gcc@12.2.0 build_system=autotools arch=linux-rocky9-x86_64_v4

[^] ^openssl@3.3.0%gcc@12.2.0~docs+shared build_system=generic certs=mozilla arch=linux-rocky9-x86_64_v4

[^] ^ca-certificates-mozilla@2023-05-30%gcc@12.2.0 build_system=generic arch=linux-rocky9-x86_64_v4

[^] ^gcc-runtime@12.2.0%gcc@12.2.0 build_system=generic arch=linux-rocky9-x86_64_v4

[e] ^glibc@2.34%gcc@11.4.1 build_system=autotools arch=linux-rocky9-x86_64_v4

[^] ^ncurses@6.5%gcc@11.4.1~symlinks+termlib abi=none build_system=autotools patches=7a351bc arch=linux-rocky9-x86_64_v4

[^] ^zlib-ng@2.1.6%gcc@11.4.1+compat+new_strategies+opt+pic+shared build_system=autotools arch=linux-rocky9-x86_64_v4

[^] ^fftw@3.3.10%gcc@14.2.0+mpi+openmp~pfft_patches+shared build_system=autotools patches=872cff9 precision=double,float arch=linux-rocky9-x86_64_v4

[^] ^gcc-runtime@14.2.0%gcc@14.2.0 build_system=generic arch=linux-rocky9-x86_64_v4

[e] ^glibc@2.34%gcc@14.2.0 build_system=autotools arch=linux-rocky9-x86_64_v4

[^] ^gmake@4.4.1%gcc@11.4.1~guile build_system=generic arch=linux-rocky9-x86_64_v4

[^] ^gcc-runtime@11.4.1%gcc@11.4.1 build_system=generic arch=linux-rocky9-x86_64_v4

[^] ^hwloc@2.9.1%gcc@12.2.0~cairo~cuda~gl~libudev+libxml2~netloc~nvml~oneapi-level-zero~opencl+pci~rocm build_system=autotools libs=shared,static arch=linux-rocky9-x86_64_v4

[^] ^libpciaccess@0.17%gcc@12.2.0 build_system=autotools arch=linux-rocky9-x86_64_v4

[^] ^util-macros@1.19.3%gcc@12.2.0 build_system=autotools arch=linux-rocky9-x86_64_v4

[^] ^libxml2@2.10.3%gcc@11.4.1+pic~python+shared build_system=autotools arch=linux-rocky9-x86_64_v4

[^] ^xz@5.4.6%gcc@11.4.1~pic build_system=autotools libs=shared,static arch=linux-rocky9-x86_64_v4

[^] ^openblas@0.3.26%gcc@12.2.0~bignuma~consistent_fpcsr+dynamic_dispatch+fortran~ilp64+locking+pic+shared build_system=makefile symbol_suffix=none threads=none arch=linux-rocky9-x86_64_v4

[^] ^perl@5.38.0%gcc@11.4.1+cpanm+opcode+open+shared+threads build_system=generic patches=714e4d1 arch=linux-rocky9-x86_64_v4

[^] ^berkeley-db@18.1.40%gcc@11.4.1+cxx~docs+stl build_system=autotools patches=26090f4,b231fcc arch=linux-rocky9-x86_64_v4

[^] ^bzip2@1.0.8%gcc@11.4.1~debug~pic+shared build_system=generic arch=linux-rocky9-x86_64_v4

[^] ^gdbm@1.23%gcc@11.4.1 build_system=autotools arch=linux-rocky9-x86_64_v4

[^] ^readline@8.2%gcc@11.4.1 build_system=autotools patches=bbf97f1 arch=linux-rocky9-x86_64_v4

[e] ^openmpi@5.0.5%gcc@14.2.0+atomics~cuda~debug+gpfs~internal-hwloc~internal-libevent~internal-pmix~java~lustre~memchecker~openshmem~romio+rsh~static~two_level_namespace+vt+wrapper-rpath build_system=autotools fabrics=none romio-filesystem=none schedulers=none arch=linux-rocky9-x86_64_v4

[^] ^pkgconf@2.2.0%gcc@11.4.1 build_system=autotools arch=linux-rocky9-x86_64_v4

- ^plumed@2.9.1%gcc@14.2.0+gsl+mpi+shared arrayfire=none build_system=autotools optional_modules=all arch=linux-rocky9-x86_64_v4

[^] ^autoconf@2.72%gcc@11.4.1 build_system=autotools arch=linux-rocky9-x86_64_v4

[^] ^automake@1.16.5%gcc@11.4.1 build_system=autotools arch=linux-rocky9-x86_64_v4

[^] ^gsl@2.7.1%gcc@12.2.0~external-cblas+pic+shared build_system=autotools arch=linux-rocky9-x86_64_v4

[^] ^libtool@2.4.7%gcc@11.4.1 build_system=autotools arch=linux-rocky9-x86_64_v4

[^] ^findutils@4.9.0%gcc@11.4.1 build_system=autotools patches=440b954 arch=linux-rocky9-x86_64_v4

[^] ^m4@1.4.19%gcc@11.4.1+sigsegv build_system=autotools patches=9dc5fbd,bfdffa7 arch=linux-rocky9-x86_64_v4

[^] ^diffutils@3.10%gcc@11.4.1 build_system=autotools arch=linux-rocky9-x86_64_v4

[^] ^libsigsegv@2.14%gcc@11.4.1 build_system=autotools arch=linux-rocky9-x86_64_v4

[^] ^py-cython@3.0.8%gcc@12.2.0 build_system=python_pip arch=linux-rocky9-x86_64_v4

[^] ^py-pip@23.1.2%gcc@12.2.0 build_system=generic arch=linux-rocky9-x86_64_v4

[^] ^py-setuptools@69.2.0%gcc@12.2.0 build_system=generic arch=linux-rocky9-x86_64_v4

[^] ^py-wheel@0.41.2%gcc@12.2.0 build_system=generic arch=linux-rocky9-x86_64_v4

[^] ^python@3.11.7%gcc@12.2.0+bz2+crypt+ctypes+dbm~debug+libxml2+lzma~nis~optimizations+pic+pyexpat+pythoncmd+readline+shared+sqlite3+ssl~tkinter+uuid+zlib build_system=generic patches=13fa8bf,b0615b2,ebdca64,f2fd060 arch=linux-rocky9-x86_64_v4

[^] ^expat@2.6.2%gcc@12.2.0+libbsd build_system=autotools arch=linux-rocky9-x86_64_v4

[^] ^libbsd@0.12.1%gcc@12.2.0 build_system=autotools arch=linux-rocky9-x86_64_v4

[^] ^libmd@1.0.4%gcc@12.2.0 build_system=autotools arch=linux-rocky9-x86_64_v4

[^] ^gettext@0.22.5%gcc@11.4.1+bzip2+curses+git~libunistring+libxml2+pic+shared+tar+xz build_system=autotools arch=linux-rocky9-x86_64_v4

[^] ^tar@1.34%gcc@11.4.1 build_system=autotools zip=pigz arch=linux-rocky9-x86_64_v4

[^] ^pigz@2.8%gcc@11.4.1 build_system=makefile arch=linux-rocky9-x86_64_v4

[^] ^zstd@1.5.6%gcc@11.4.1+programs build_system=makefile compression=none libs=shared,static arch=linux-rocky9-x86_64_v4

[^] ^libffi@3.4.6%gcc@12.2.0 build_system=autotools arch=linux-rocky9-x86_64_v4

[^] ^libxcrypt@4.4.35%gcc@12.2.0~obsolete_api build_system=autotools patches=4885da3 arch=linux-rocky9-x86_64_v4

[^] ^sqlite@3.43.2%gcc@12.2.0+column_metadata+dynamic_extensions+fts~functions+rtree build_system=autotools arch=linux-rocky9-x86_64_v4

[^] ^util-linux-uuid@2.38.1%gcc@12.2.0 build_system=autotools arch=linux-rocky9-x86_64_v4

[^] ^python-venv@1.0%gcc@12.2.0 build_system=generic arch=linux-rocky9-x86_64_v4

That is a lot of output. To recap the symbols used in it:

- - means that the listed application/library is not installed anywhere, neither in upstream nor personally
- [^] means that the listed application/library is already installed in upstream and will be used to avoid re-installation
- [e] means that the listed application/library is marked as external. glibc is marked as external as before, as is Open MPI (as explained in the packages.yaml documentation).
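For long spec output, a quick tally of these markers can make it easier to see how much would actually be built. The following is a rough sketch rather than a Spack feature: summarise_spec_status is a hypothetical helper that reads saved spec output on stdin and counts lines by their leading marker. It also counts [+], which appears in the install output further down this page for items that are already in place.

```shell
# Hypothetical helper: count install-status markers in `spack spec` output read on stdin.
summarise_spec_status() {
    awk '
        /^[[:space:]]*\[\^\]/       { upstream++ }    # already in upstream
        /^[[:space:]]*\[e\]/        { external++ }    # marked as external
        /^[[:space:]]*\[\+\]/       { installed++ }   # already installed
        /^[[:space:]]*-[[:space:]]/ { missing++ }     # not installed anywhere
        END {
            printf "to build: %d\nupstream: %d\nexternal: %d\ninstalled: %d\n",
                   missing, upstream, external, installed
        }
    '
}

# Example usage (run where spack is available):
#   spack -C ${HOME}/spack-config-templates/0.23.1 spec gromacs +plumed ^openmpi ^fftw+openmp > spec.txt
#   summarise_spec_status < spec.txt
```

The "to build" count is the number to watch: each of those entries will be compiled into your personal install tree.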

As we can see, everything required to compile Gromacs is already available in our central upstream ([^]) apart from plumed. plumed will be compiled as part of the install and then used during the compilation of a new version of Gromacs itself with PLUMED support.

You should notice that the variant list for gromacs now contains +plumed as well. So, as before, let's go ahead and install our personal variant:

spack install output

$ spack -C ${HOME}/spack-config-templates/0.23.1 install -j ${NSLOTS} gromacs +plumed ^openmpi ^fftw+openmp

[+] /usr (external glibc-2.34-xri56vcyzs7kkvakhoku3fefc46nw25y)

[+] /usr (external glibc-2.34-vjgsh5eoloariofarhttteo73mj5rgql)

[+] /share/apps/rocky9/general/libs/openmpi/gcc/14.2.0/5.0.5 (external openmpi-5.0.5-ccatyv2dkfmw7f433zsigue7ffe2mrfi)

[+] /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-12.2.0/gcc-runtime/12.2.0-w77gg5r

[+] /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-11.4.1/gcc-runtime/11.4.1-llid4hw

[+] /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-14.2.0/gcc-runtime/14.2.0-4w7sesu

[+] /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-12.2.0/libpciaccess/0.17-tpopwgn

[+] /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-12.2.0/libmd/1.0.4-y6ppafl

[+] /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-12.2.0/libffi/3.4.6-yc4oqfz

[+] /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-12.2.0/gsl/2.7.1-ynxvfrc

[+] /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-12.2.0/util-linux-uuid/2.38.1-nkfrifq

[+] /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-12.2.0/libxcrypt/4.4.35-4mszlpj

[+] /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-12.2.0/openblas/0.3.26-nt553wl

[+] /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-12.2.0/nghttp2/1.57.0-4ntqcoo

[+] /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-11.4.1/findutils/4.9.0-62lolye

[+] /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-11.4.1/gmake/4.4.1-xchit5a

[+] /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-11.4.1/libtool/2.4.7-c43op4r

[+] /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-11.4.1/zstd/1.5.6-my7tyw6

[+] /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-11.4.1/pkgconf/2.2.0-7wg26bz

[+] /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-11.4.1/bzip2/1.0.8-uj4wyhx

[+] /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-14.2.0/fftw/3.3.10-openmpi-5.0.5-hdrbd72

[+] /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-11.4.1/xz/5.4.6-rwn7pno

[+] /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-11.4.1/zlib-ng/2.1.6-g2yruc3

[+] /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-11.4.1/libsigsegv/2.14-m2gvtuy

[+] /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-11.4.1/berkeley-db/18.1.40-cogxxtx

[+] /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-12.2.0/libbsd/0.12.1-zb23l3j

[+] /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-11.4.1/ncurses/6.5-4n2uzha

[+] /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-12.2.0/openssl/3.3.0-filwsx6

[+] /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-11.4.1/pigz/2.8-somkvv4

[+] /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-11.4.1/libxml2/2.10.3-q6zmsq6

[+] /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-11.4.1/m4/1.4.19-fdljdyg

[+] /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-12.2.0/expat/2.6.2-je4ggnn

[+] /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-11.4.1/readline/8.2-ea7drzj

[+] /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-12.2.0/curl/8.7.1-v4za2y6

[+] /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-11.4.1/tar/1.34-ivzmnos

[+] /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-12.2.0/hwloc/2.9.1-zqnfdoq

[+] /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-11.4.1/gdbm/1.23-bx77xc6

[+] /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-12.2.0/sqlite/3.43.2-t5xgpdh

[+] /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-12.2.0/cmake/3.27.9-o3vtj26

[+] /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-11.4.1/gettext/0.22.5-udcuonu

[+] /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-11.4.1/perl/5.38.0-eyk53wh

[+] /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-12.2.0/python/3.11.7-rj3pox3

[+] /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-11.4.1/autoconf/2.72-h32ralr

[+] /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-12.2.0/python-venv/1.0-s6divkp

[+] /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-11.4.1/automake/1.16.5-y5sqj65

[+] /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-12.2.0/py-setuptools/69.2.0-udom3th

[+] /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-12.2.0/py-cython/3.0.8-zcf7xi7

==> Installing plumed-2.9.1-bteacu6ijezqiwv5lu4zeregufxcfem7 [48/49]

==> No binary for plumed-2.9.1-bteacu6ijezqiwv5lu4zeregufxcfem7 found: installing from source

==> Fetching https://mirror.spack.io/_source-cache/archive/e2/e24563ad1eb657611918e0c978d9c5212340f128b4f1aa5efbd439a0b2e91b58.tar.gz

==> Ran patch() for plumed

==> plumed: Executing phase: 'autoreconf'

==> plumed: Executing phase: 'configure'

==> plumed: Executing phase: 'build'

==> plumed: Executing phase: 'install'

==> plumed: Successfully installed plumed-2.9.1-bteacu6ijezqiwv5lu4zeregufxcfem7

Stage: 7.72s. Autoreconf: 4.45s. Configure: 25.38s. Build: 17m 6.37s. Install: 44.53s. Post-install: 1.65s. Total: 18m 34.15s

[+] /data/scratch/abc123/spack/apps/linux-rocky9-x86_64_v4/gcc-14.2.0/plumed/2.9.1-openmpi-5.0.5-bteacu6

==> Installing gromacs-2023-2wld7jfmw5huklydxwwfgq3flgd67x4i [49/49]

==> No binary for gromacs-2023-2wld7jfmw5huklydxwwfgq3flgd67x4i found: installing from source

==> Fetching https://mirror.spack.io/_source-cache/archive/ac/ac92c6da72fbbcca414fd8a8d979e56ecf17c4c1cdabed2da5cfb4e7277b7ba8.tar.gz

NOTE: shell only version, useful when plumed is cross compiled

NOTE: shell only version, useful when plumed is cross compiled

PLUMED patching tool

MD engine: gromacs-2023

PLUMED location: /data/scratch/abc123/spack/apps/linux-rocky9-x86_64_v4/gcc-14.2.0/plumed/2.9.1-openmpi-5.0.5-bteacu6/lib/plumed

diff file: /data/scratch/abc123/spack/apps/linux-rocky9-x86_64_v4/gcc-14.2.0/plumed/2.9.1-openmpi-5.0.5-bteacu6/lib/plumed/patches/gromacs-2023.diff

sourcing config file: /data/scratch/abc123/spack/apps/linux-rocky9-x86_64_v4/gcc-14.2.0/plumed/2.9.1-openmpi-5.0.5-bteacu6/lib/plumed/patches/gromacs-2023.config

Executing plumed_before_patch function

PLUMED can be incorporated into gromacs using the standard patching procedure.

Patching must be done in the gromacs root directory _before_ the cmake command is invoked.

On clusters you may want to patch gromacs using the static version of plumed, in this case

building gromacs can result in multiple errors. One possible solution is to configure gromacs

with these additional options:

cmake -DBUILD_SHARED_LIBS=OFF -DGMX_PREFER_STATIC_LIBS=ON

To enable PLUMED in a gromacs simulation one should use

mdrun with an extra -plumed flag. The flag can be used to

specify the name of the PLUMED input file, e.g.:

gmx mdrun -plumed plumed.dat

For more information on gromacs you should visit http://www.gromacs.org

Linking Plumed.h, Plumed.inc, and Plumed.cmake (shared mode)

Patching with on-the-fly diff from stored originals

patching file ./cmake/gmxVersionInfo.cmake

Hunk #1 FAILED at 257.

1 out of 1 hunk FAILED -- saving rejects to file ./cmake/gmxVersionInfo.cmake.rej

patching file ./src/gromacs/CMakeLists.txt

patching file ./src/gromacs/mdlib/expanded.cpp

patching file ./src/gromacs/mdlib/expanded.h

patching file ./src/gromacs/mdlib/sim_util.cpp

patching file ./src/gromacs/mdrun/legacymdrunoptions.cpp

patching file ./src/gromacs/mdrun/legacymdrunoptions.h

patching file ./src/gromacs/mdrun/md.cpp

patching file ./src/gromacs/mdrun/minimize.cpp

patching file ./src/gromacs/mdrun/replicaexchange.cpp

patching file ./src/gromacs/mdrun/replicaexchange.h

patching file ./src/gromacs/mdrun/rerun.cpp

patching file ./src/gromacs/mdrun/runner.cpp

patching file ./src/gromacs/modularsimulator/expandedensembleelement.cpp

patching file ./src/gromacs/taskassignment/decidegpuusage.cpp

patching file ./src/gromacs/taskassignment/include/gromacs/taskassignment/decidegpuusage.h

PLUMED is compiled with MPI support so you can configure gromacs-2023 with MPI

==> Ran patch() for gromacs

==> gromacs: Executing phase: 'cmake'

==> gromacs: Executing phase: 'build'

==> gromacs: Executing phase: 'install'

==> gromacs: Successfully installed gromacs-2023-2wld7jfmw5huklydxwwfgq3flgd67x4i

Stage: 3.64s. Cmake: 33.20s. Build: 1m 45.51s. Install: 3.03s. Post-install: 1.20s. Total: 2m 29.50s

[+] /data/scratch/abc123/spack/apps/linux-rocky9-x86_64_v4/gcc-14.2.0/gromacs/2023-openmpi-5.0.5-2wld7jf

To break down what has happened above:

- The existing upstream dependencies have been used from the central location rather than being re-installed personally
- Spack has noticed that plumed and gromacs-2023 are missing, so it has pulled source code tarballs to the source_cache directory defined in config.yaml, compiled both from source, and installed both to the location defined as install_tree:root: in config.yaml
- Spack has automatically patched in PLUMED support as requested

Let's now return to our spack find command:

spack find output

$ spack -C ${HOME}/spack-config-templates/0.23.1 find -x -p -v gromacs

-- linux-rocky9-x86_64_v4 / gcc@12.2.0 --------------------------

gromacs@2024.1~cp2k~cuda~cycle_subcounters~double+gmxapi+hwloc~intel_provided_gcc~ipo~mdrun_only~mpi+nblib~nosuffix~opencl+openmp~relaxed_double_precision+shared~sycl build_system=cmake build_type=Release generator=make openmp_max_threads=none /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-12.2.0/gromacs/2024.1-yekd62f-serial

gromacs@2024.1~cp2k~cuda~cycle_subcounters~double+gmxapi+hwloc~intel_provided_gcc~ipo~mdrun_only+mpi+nblib~nosuffix~opencl+openmp~relaxed_double_precision+shared~sycl build_system=cmake build_type=Release generator=make openmp_max_threads=none /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-12.2.0/gromacs/2024.1-openmpi-5.0.3-55bdvpa-mpi

gromacs@2024.1~cp2k+cuda~cufftmp~cycle_subcounters~double+gmxapi+hwloc~intel_provided_gcc~ipo~mdrun_only+mpi+nblib~nosuffix~opencl+openmp~relaxed_double_precision+shared~sycl build_system=cmake build_type=Release cuda_arch=70,80,90 generator=make openmp_max_threads=none /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-12.2.0/gromacs/2024.1-openmpi-5.0.3-tzp3elt-mpi

gromacs@2024.3~cp2k+cuda~cufftmp~cycle_subcounters~double+gmxapi+hwloc~intel_provided_gcc~ipo~mdrun_only+mpi+nblib~nosuffix~nvshmem~opencl+openmp~relaxed_double_precision+shared build_system=cmake build_type=Release cuda_arch=70,80,90 generator=make openmp_max_threads=none /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-12.2.0/gromacs/2024.3-openmpi-5.0.3-4tmxysw-mpi

-- linux-rocky9-x86_64_v4 / gcc@14.2.0 --------------------------

gromacs@2023~cp2k~cuda~cycle_subcounters~double+gmxapi+hwloc~intel_provided_gcc~ipo~mdrun_only+mpi+nblib~nosuffix~opencl+openmp+plumed~relaxed_double_precision+shared build_system=cmake build_type=Release generator=make openmp_max_threads=none /data/scratch/abc123/spack/apps/linux-rocky9-x86_64_v4/gcc-14.2.0/gromacs/2023-openmpi-5.0.5-2wld7jf

gromacs@2024.3~cp2k~cuda~cycle_subcounters~double+gmxapi+hwloc~intel_provided_gcc~ipo~mdrun_only~mpi+nblib~nosuffix~opencl+openmp~relaxed_double_precision+shared build_system=cmake build_type=Release generator=make openmp_max_threads=none /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-14.2.0/gromacs/2024.3-uhqvqaj-serial

gromacs@2024.3~cp2k~cuda~cycle_subcounters~double+gmxapi+hwloc~intel_provided_gcc~ipo~mdrun_only+mpi+nblib~nosuffix~opencl+openmp~relaxed_double_precision+shared build_system=cmake build_type=Release generator=make openmp_max_threads=none /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-14.2.0/gromacs/2024.3-openmpi-5.0.5-q4j2vts-mpi

==> 7 installed packages

So now we have Gromacs 2023 with PLUMED support (+plumed) installed personally, alongside the centrally installed versions of Gromacs.

If we now add our private module path using module use, as specified in modules.yaml:

module use /data/scratch/${USER}/spack/privatemodules/linux-rocky9-x86_64_v4

Then we should see our personal version available for use:

Output from module avail

$ module avail -l gromacs

- Package/Alias -----------------------.- Versions --------.- Last mod. -------

/data/scratch/abc123/spack/privatemodules/linux-rocky9-x86_64_v4:

gromacs-mpi/2023-openmpi-5.0.5-gcc-14.2.0-plumed 2025/05/14 10:46:26

/share/apps/rocky9/environmentmodules/apocrita-modules/spack:

gromacs-gpu/2024.1-openmpi-5.0.3-cuda-12.4.0-gcc-12.2.0 2025/05/01 10:40:43

gromacs-gpu/2024.3-openmpi-5.0.3-cuda-12.6.2-gcc-12.2.0 2025/05/12 14:47:25

gromacs-mpi/2024.1-openmpi-5.0.3-gcc-12.2.0 2025/05/01 10:40:43

gromacs-mpi/2024.3-openmpi-5.0.5-gcc-14.2.0 2025/05/08 14:48:07

gromacs/2024.1-gcc-12.2.0 2025/05/01 10:40:44

gromacs/2024.3-gcc-14.2.0 2025/05/08 14:48:07

And indeed, we can load and use our personal version (remember to use the full module name):

Output from module load

$ module load gromacs-mpi/2023-openmpi-5.0.5-gcc-14.2.0-plumed

Loading gromacs-mpi/2023-openmpi-5.0.5-gcc-14.2.0-plumed

Loading requirement: openmpi/5.0.5-gcc-14.2.0 gsl/2.7.1-gcc-12.2.0

$ gmx_mpi --version

:-) GROMACS - gmx_mpi, 2023-PLUMED_spack (-:

Executable: /data/scratch/abc123/spack/apps/linux-rocky9-x86_64_v4/gcc-14.2.0/gromacs/2023-openmpi-5.0.5-2wld7jf/bin/gmx_mpi

Data prefix: /data/scratch/abc123/spack/apps/linux-rocky9-x86_64_v4/gcc-14.2.0/gromacs/2023-openmpi-5.0.5-2wld7jf

Working dir: /data/home/abc123

Command line:

gmx_mpi --version

GROMACS version: 2023-PLUMED_spack

Precision: mixed

Memory model: 64 bit

MPI library: MPI

OpenMP support: enabled (GMX_OPENMP_MAX_THREADS = 128)

GPU support: disabled

SIMD instructions: AVX_512

CPU FFT library: fftw-3.3.10-sse2-avx-avx2-avx2_128-avx512

GPU FFT library: none

Multi-GPU FFT: none

RDTSCP usage: enabled

TNG support: enabled

Hwloc support: hwloc-2.9.1

Tracing support: disabled

C compiler: /share/apps/rocky9/general/apps/spack/0.23.1/lib/spack/env/gcc/gcc GNU 14.2.0

C compiler flags: -fexcess-precision=fast -funroll-all-loops -mavx512f -mfma -mavx512vl -mavx512dq -mavx512bw -Wno-missing-field-initializers -O3 -DNDEBUG

C++ compiler: /share/apps/rocky9/general/apps/spack/0.23.1/lib/spack/env/gcc/g++ GNU 14.2.0

C++ compiler flags: -fexcess-precision=fast -funroll-all-loops -mavx512f -mfma -mavx512vl -mavx512dq -mavx512bw -Wno-missing-field-initializers -Wno-cast-function-type-strict SHELL:-fopenmp -O3 -DNDEBUG

BLAS library: External - user-supplied

LAPACK library: External - user-supplied
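If you want to confirm from a script that the loaded build is the PLUMED-patched one, the version line is a convenient thing to check. The sketch below assumes, based only on the output above, that a PLUMED-patched build reports PLUMED in its "GROMACS version" line; is_plumed_build is a hypothetical helper name.

```shell
# Hypothetical helper: read `gmx_mpi --version` output on stdin and report
# whether the "GROMACS version" line carries a PLUMED marker.
is_plumed_build() {
    if grep -q 'GROMACS version:.*PLUMED'; then
        echo "PLUMED-patched build"
    else
        echo "no PLUMED marker found"
        return 1
    fi
}

# Example usage (after loading the module):
#   gmx_mpi --version 2>&1 | is_plumed_build
```

The non-zero return status in the negative case lets the check be used directly in an if statement or at the top of a job script.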

Add CUDA support

What if we wanted Gromacs with PLUMED support and also GPU support via CUDA? It's possible, but you need to specify the right variant. Remember, Spack will install the default variants unless explicitly told otherwise. If you look again at the variants for Gromacs, you will see that cuda is set to (false) by default. The centrally available gromacs-gpu module loads a version that has explicitly been compiled with CUDA support.

Let's see if we can spec a personal installation of Gromacs adding in PLUMED support and CUDA:

Specify your cuda_arch

When installing a CUDA variant of a package from Spack, you must also specify a cuda_arch (sm_70 - Volta V100, sm_80 - Ampere A100, sm_90 - Hopper H100). Some packages, like Gromacs, let you install one variant that supports all three at once; others may require a separate install per cuda_arch. Check the output of the spec command for more details.

spack spec output

$ spack -C ${HOME}/spack-config-templates/0.23.1 spec gromacs +cuda cuda_arch=70,80,90 +plumed ^openmpi ^fftw+openmp

- gromacs@2023%gcc@12.2.0~cp2k+cuda~cufftmp~cycle_subcounters~double+gmxapi+hwloc~intel_provided_gcc~ipo~mdrun_only+mpi+nblib~nosuffix~opencl+openmp+plumed~relaxed_double_precision+shared build_system=cmake build_type=Release cuda_arch=70,80,90 generator=make openmp_max_threads=none arch=linux-rocky9-x86_64_v4

[^] ^cmake@3.27.9%gcc@12.2.0~doc+ncurses+ownlibs build_system=generic build_type=Release arch=linux-rocky9-x86_64_v4

[^] ^curl@8.7.1%gcc@12.2.0~gssapi~ldap~libidn2~librtmp~libssh~libssh2+nghttp2 build_system=autotools libs=shared,static tls=openssl arch=linux-rocky9-x86_64_v4

[^] ^nghttp2@1.57.0%gcc@12.2.0 build_system=autotools arch=linux-rocky9-x86_64_v4

[^] ^openssl@3.3.0%gcc@12.2.0~docs+shared build_system=generic certs=mozilla arch=linux-rocky9-x86_64_v4

[^] ^ca-certificates-mozilla@2023-05-30%gcc@12.2.0 build_system=generic arch=linux-rocky9-x86_64_v4

[e] ^glibc@2.34%gcc@11.4.1 build_system=autotools arch=linux-rocky9-x86_64_v4

[^] ^ncurses@6.5%gcc@11.4.1~symlinks+termlib abi=none build_system=autotools patches=7a351bc arch=linux-rocky9-x86_64_v4

[^] ^zlib-ng@2.1.6%gcc@11.4.1+compat+new_strategies+opt+pic+shared build_system=autotools arch=linux-rocky9-x86_64_v4

[^] ^cuda@12.6.2%gcc@12.2.0~allow-unsupported-compilers~dev build_system=generic arch=linux-rocky9-x86_64_v4

[^] ^libxml2@2.10.3%gcc@11.4.1+pic~python+shared build_system=autotools arch=linux-rocky9-x86_64_v4

[^] ^xz@5.4.6%gcc@11.4.1~pic build_system=autotools libs=shared,static arch=linux-rocky9-x86_64_v4

[^] ^fftw@3.3.10%gcc@12.2.0+mpi+openmp~pfft_patches+shared build_system=autotools precision=double,float arch=linux-rocky9-x86_64_v4

[^] ^gcc-runtime@12.2.0%gcc@12.2.0 build_system=generic arch=linux-rocky9-x86_64_v4

[e] ^glibc@2.34%gcc@12.2.0 build_system=autotools arch=linux-rocky9-x86_64_v4

[^] ^gmake@4.4.1%gcc@11.4.1~guile build_system=generic arch=linux-rocky9-x86_64_v4

[^] ^gcc-runtime@11.4.1%gcc@11.4.1 build_system=generic arch=linux-rocky9-x86_64_v4

[^] ^hwloc@2.9.1%gcc@12.2.0~cairo~cuda~gl~libudev+libxml2~netloc~nvml~oneapi-level-zero~opencl+pci~rocm build_system=autotools libs=shared,static arch=linux-rocky9-x86_64_v4

[^] ^libpciaccess@0.17%gcc@12.2.0 build_system=autotools arch=linux-rocky9-x86_64_v4

[^] ^util-macros@1.19.3%gcc@12.2.0 build_system=autotools arch=linux-rocky9-x86_64_v4

[^] ^openblas@0.3.26%gcc@12.2.0~bignuma~consistent_fpcsr+dynamic_dispatch+fortran~ilp64+locking+pic+shared build_system=makefile symbol_suffix=none threads=none arch=linux-rocky9-x86_64_v4

[^] ^perl@5.38.0%gcc@11.4.1+cpanm+opcode+open+shared+threads build_system=generic patches=714e4d1 arch=linux-rocky9-x86_64_v4

[^] ^berkeley-db@18.1.40%gcc@11.4.1+cxx~docs+stl build_system=autotools patches=26090f4,b231fcc arch=linux-rocky9-x86_64_v4

[^] ^bzip2@1.0.8%gcc@11.4.1~debug~pic+shared build_system=generic arch=linux-rocky9-x86_64_v4

[^] ^gdbm@1.23%gcc@11.4.1 build_system=autotools arch=linux-rocky9-x86_64_v4

[^] ^readline@8.2%gcc@11.4.1 build_system=autotools patches=bbf97f1 arch=linux-rocky9-x86_64_v4

[e] ^openmpi@5.0.3%gcc@12.2.0~atomics~cuda+gpfs~internal-hwloc~internal-libevent~internal-pmix~java~legacylaunchers~lustre~memchecker~openshmem~orterunprefix~romio+rsh~static+vt+wrapper-rpath build_system=autotools fabrics=none romio-filesystem=none schedulers=none arch=linux-rocky9-x86_64_v4

[^] ^pkgconf@2.2.0%gcc@11.4.1 build_system=autotools arch=linux-rocky9-x86_64_v4

- ^plumed@2.9.1%gcc@12.2.0+gsl+mpi+shared arrayfire=none build_system=autotools optional_modules=all arch=linux-rocky9-x86_64_v4

[^] ^autoconf@2.72%gcc@11.4.1 build_system=autotools arch=linux-rocky9-x86_64_v4

[^] ^automake@1.16.5%gcc@11.4.1 build_system=autotools arch=linux-rocky9-x86_64_v4

[^] ^gsl@2.7.1%gcc@12.2.0~external-cblas+pic+shared build_system=autotools arch=linux-rocky9-x86_64_v4

[^] ^libtool@2.4.7%gcc@11.4.1 build_system=autotools arch=linux-rocky9-x86_64_v4

[^] ^findutils@4.9.0%gcc@11.4.1 build_system=autotools patches=440b954 arch=linux-rocky9-x86_64_v4

[^] ^m4@1.4.19%gcc@11.4.1+sigsegv build_system=autotools patches=9dc5fbd,bfdffa7 arch=linux-rocky9-x86_64_v4

[^] ^diffutils@3.10%gcc@11.4.1 build_system=autotools arch=linux-rocky9-x86_64_v4

[^] ^libsigsegv@2.14%gcc@11.4.1 build_system=autotools arch=linux-rocky9-x86_64_v4

[^] ^py-cython@3.0.8%gcc@12.2.0 build_system=python_pip arch=linux-rocky9-x86_64_v4

[^] ^py-pip@23.1.2%gcc@12.2.0 build_system=generic arch=linux-rocky9-x86_64_v4

[^] ^py-setuptools@69.2.0%gcc@12.2.0 build_system=generic arch=linux-rocky9-x86_64_v4

[^] ^py-wheel@0.41.2%gcc@12.2.0 build_system=generic arch=linux-rocky9-x86_64_v4

[^] ^python@3.11.7%gcc@12.2.0+bz2+crypt+ctypes+dbm~debug+libxml2+lzma~nis~optimizations+pic+pyexpat+pythoncmd+readline+shared+sqlite3+ssl~tkinter+uuid+zlib build_system=generic patches=13fa8bf,b0615b2,ebdca64,f2fd060 arch=linux-rocky9-x86_64_v4

[^] ^expat@2.6.2%gcc@12.2.0+libbsd build_system=autotools arch=linux-rocky9-x86_64_v4

[^] ^libbsd@0.12.1%gcc@12.2.0 build_system=autotools arch=linux-rocky9-x86_64_v4

[^] ^libmd@1.0.4%gcc@12.2.0 build_system=autotools arch=linux-rocky9-x86_64_v4

[^] ^gettext@0.22.5%gcc@11.4.1+bzip2+curses+git~libunistring+libxml2+pic+shared+tar+xz build_system=autotools arch=linux-rocky9-x86_64_v4

[^] ^tar@1.34%gcc@11.4.1 build_system=autotools zip=pigz arch=linux-rocky9-x86_64_v4

[^] ^pigz@2.8%gcc@11.4.1 build_system=makefile arch=linux-rocky9-x86_64_v4

[^] ^zstd@1.5.6%gcc@11.4.1+programs build_system=makefile compression=none libs=shared,static arch=linux-rocky9-x86_64_v4

[^] ^libffi@3.4.6%gcc@12.2.0 build_system=autotools arch=linux-rocky9-x86_64_v4

[^] ^libxcrypt@4.4.35%gcc@12.2.0~obsolete_api build_system=autotools patches=4885da3 arch=linux-rocky9-x86_64_v4

[^] ^sqlite@3.43.2%gcc@12.2.0+column_metadata+dynamic_extensions+fts~functions+rtree build_system=autotools arch=linux-rocky9-x86_64_v4

[^] ^util-linux-uuid@2.38.1%gcc@12.2.0 build_system=autotools arch=linux-rocky9-x86_64_v4

[^] ^python-venv@1.0%gcc@12.2.0 build_system=generic arch=linux-rocky9-x86_64_v4

The output is similar to the PLUMED variant above, but with CUDA added (which comes from upstream). Almost everything required to compile Gromacs is already available in our central upstream ([^]), including cuda.

We need to compile an additional version of PLUMED, this time using GCC 12.2.0. This is because the most recent version of CUDA available from Spack v0.23.1 is CUDA 12.6.2, and the newest GCC compiler supported by CUDA 12.6.2 is GCC 13.2, so the GCC 14.2.0 build from the previous section cannot be reused here.
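To see at a glance how much of a spec still has to be built, the status markers in the first column of the spec output can be tallied. The following is a minimal sketch rather than a Spack feature: summarise_spec is a hypothetical helper name, and it assumes the markers appear as in the output above ([^] upstream, [e] external, [+] already installed, - not yet installed). The embedded sample is an abbreviated stand-in for real output.

```shell
# Hypothetical helper: tally the install-status markers of `spack spec`
# output read from stdin and list the packages that still need building.
summarise_spec() {
    awk '
        $1 == "[^]" { upstream++ }
        $1 == "[e]" { external++ }
        $1 == "[+]" { installed++ }
        $1 == "-"   {
            missing++
            sub(/^\^/, "", $2)        # drop the leading dependency caret
            split($2, parts, "%")     # keep only name@version
            names = names " " parts[1]
        }
        END {
            printf "upstream=%d external=%d installed=%d to_build=%d\n",
                   upstream, external, installed, missing
            if (missing > 0) printf "to build:%s\n", names
        }
    '
}

# Abbreviated sample in the same shape as the output above.
summarise_spec <<'EOF'
 -   gromacs@2023%gcc@12.2.0+cuda+plumed
[^]      ^cmake@3.27.9%gcc@12.2.0
[e]      ^glibc@2.34%gcc@11.4.1
 -       ^plumed@2.9.1%gcc@12.2.0+mpi
EOF
```

In practice the real spec command shown above would be piped into summarise_spec in place of the sample.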

Once we have this version of PLUMED, Gromacs itself should compile with PLUMED and CUDA support. You should notice that the variant list for gromacs contains +cuda, +plumed and cuda_arch=70,80,90 as well. So, as before, let's go ahead and install our personal variant:

spack install output

$ spack -C ${HOME}/spack-config-templates/0.23.1 install -j ${NSLOTS} gromacs +cuda cuda_arch=70,80,90 +plumed ^openmpi ^fftw+openmp

[+] /usr (external glibc-2.34-xri56vcyzs7kkvakhoku3fefc46nw25y)

[+] /usr (external glibc-2.34-q2367nemoxqj6j3fg54cg4mjlkvc7f5n)

[+] /share/apps/rocky9/general/libs/openmpi/gcc/12.2.0/5.0.3 (external openmpi-5.0.3-f6rfqovydeohhtdzs6yl2qiro2hxltpm)

[+] /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-11.4.1/gcc-runtime/11.4.1-llid4hw

[+] /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-12.2.0/gcc-runtime/12.2.0-w77gg5r

[+] /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-11.4.1/berkeley-db/18.1.40-cogxxtx

[+] /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-11.4.1/gmake/4.4.1-xchit5a

[+] /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-12.2.0/gsl/2.7.1-ynxvfrc

[+] /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-11.4.1/xz/5.4.6-rwn7pno

[+] /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-12.2.0/fftw/3.3.10-openmpi-5.0.3-3ptgblf

[+] /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-11.4.1/zlib-ng/2.1.6-g2yruc3

[+] /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-11.4.1/findutils/4.9.0-62lolye

[+] /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-12.2.0/openblas/0.3.26-nt553wl

[+] /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-11.4.1/pkgconf/2.2.0-7wg26bz

[+] /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-12.2.0/libmd/1.0.4-y6ppafl

[+] /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-12.2.0/libxcrypt/4.4.35-4mszlpj

[+] /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-11.4.1/zstd/1.5.6-my7tyw6

[+] /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-12.2.0/libffi/3.4.6-yc4oqfz

[+] /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-11.4.1/libsigsegv/2.14-m2gvtuy

[+] /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-11.4.1/bzip2/1.0.8-uj4wyhx

[+] /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-12.2.0/openssl/3.3.0-filwsx6

[+] /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-11.4.1/pigz/2.8-somkvv4

[+] /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-11.4.1/libtool/2.4.7-c43op4r

[+] /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-12.2.0/nghttp2/1.57.0-4ntqcoo

[+] /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-11.4.1/libxml2/2.10.3-q6zmsq6

[+] /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-12.2.0/util-linux-uuid/2.38.1-nkfrifq

[+] /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-11.4.1/ncurses/6.5-4n2uzha

[+] /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-12.2.0/libbsd/0.12.1-zb23l3j

[+] /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-11.4.1/m4/1.4.19-fdljdyg

[+] /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-11.4.1/tar/1.34-ivzmnos

[+] /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-12.2.0/libpciaccess/0.17-tpopwgn

[+] /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-12.2.0/curl/8.7.1-v4za2y6

[+] /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-12.2.0/cuda/12.6.2-7ahvfr4

[+] /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-11.4.1/readline/8.2-ea7drzj

[+] /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-12.2.0/expat/2.6.2-je4ggnn

[+] /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-11.4.1/gettext/0.22.5-udcuonu

[+] /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-12.2.0/hwloc/2.9.1-zqnfdoq

[+] /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-12.2.0/cmake/3.27.9-o3vtj26

[+] /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-12.2.0/sqlite/3.43.2-t5xgpdh

[+] /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-11.4.1/gdbm/1.23-bx77xc6

[+] /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-11.4.1/perl/5.38.0-eyk53wh

[+] /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-12.2.0/python/3.11.7-rj3pox3

[+] /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-11.4.1/autoconf/2.72-h32ralr

[+] /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-12.2.0/python-venv/1.0-s6divkp

[+] /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-11.4.1/automake/1.16.5-y5sqj65

[+] /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-12.2.0/py-setuptools/69.2.0-udom3th

[+] /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-12.2.0/py-cython/3.0.8-zcf7xi7

==> Installing plumed-2.9.1-rx3uoj7klci5wi6swovlwjyuibi4ksif [48/49]

==> No binary for plumed-2.9.1-rx3uoj7klci5wi6swovlwjyuibi4ksif found: installing from source

==> Using cached archive: /data/scratch/abc123/spack/cache/_source-cache/archive/e2/e24563ad1eb657611918e0c978d9c5212340f128b4f1aa5efbd439a0b2e91b58.tar.gz

==> Ran patch() for plumed

==> plumed: Executing phase: 'autoreconf'

==> plumed: Executing phase: 'configure'

==> plumed: Executing phase: 'build'

==> plumed: Executing phase: 'install'

==> plumed: Successfully installed plumed-2.9.1-rx3uoj7klci5wi6swovlwjyuibi4ksif

Stage: 2.76s. Autoreconf: 4.28s. Configure: 20.73s. Build: 16m 17.39s. Install: 43.79s. Post-install: 1.92s. Total: 17m 34.56s

[+] /data/scratch/abc123/spack/apps/linux-rocky9-x86_64_v4/gcc-12.2.0/plumed/2.9.1-openmpi-5.0.3-rx3uoj7

==> Installing gromacs-2023-melbrk7vxfl2gcbzd7rghv6yimsn66co [49/49]

==> No binary for gromacs-2023-melbrk7vxfl2gcbzd7rghv6yimsn66co found: installing from source

==> Using cached archive: /data/scratch/abc123/spack/cache/_source-cache/archive/ac/ac92c6da72fbbcca414fd8a8d979e56ecf17c4c1cdabed2da5cfb4e7277b7ba8.tar.gz

NOTE: shell only version, useful when plumed is cross compiled

NOTE: shell only version, useful when plumed is cross compiled

PLUMED patching tool

MD engine: gromacs-2023

PLUMED location: /data/scratch/abc123/spack/apps/linux-rocky9-x86_64_v4/gcc-12.2.0/plumed/2.9.1-openmpi-5.0.3-rx3uoj7/lib/plumed

diff file: /data/scratch/abc123/spack/apps/linux-rocky9-x86_64_v4/gcc-12.2.0/plumed/2.9.1-openmpi-5.0.3-rx3uoj7/lib/plumed/patches/gromacs-2023.diff

sourcing config file: /data/scratch/abc123/spack/apps/linux-rocky9-x86_64_v4/gcc-12.2.0/plumed/2.9.1-openmpi-5.0.3-rx3uoj7/lib/plumed/patches/gromacs-2023.config

Executing plumed_before_patch function

PLUMED can be incorporated into gromacs using the standard patching procedure.

Patching must be done in the gromacs root directory _before_ the cmake command is invoked.

On clusters you may want to patch gromacs using the static version of plumed, in this case

building gromacs can result in multiple errors. One possible solution is to configure gromacs

with these additional options:

cmake -DBUILD_SHARED_LIBS=OFF -DGMX_PREFER_STATIC_LIBS=ON

To enable PLUMED in a gromacs simulation one should use

mdrun with an extra -plumed flag. The flag can be used to

specify the name of the PLUMED input file, e.g.:

gmx mdrun -plumed plumed.dat

For more information on gromacs you should visit http://www.gromacs.org

Linking Plumed.h, Plumed.inc, and Plumed.cmake (shared mode)

Patching with on-the-fly diff from stored originals

patching file ./cmake/gmxVersionInfo.cmake

Hunk #1 FAILED at 257.

1 out of 1 hunk FAILED -- saving rejects to file ./cmake/gmxVersionInfo.cmake.rej

patching file ./src/gromacs/CMakeLists.txt

patching file ./src/gromacs/mdlib/expanded.cpp

patching file ./src/gromacs/mdlib/expanded.h

patching file ./src/gromacs/mdlib/sim_util.cpp

patching file ./src/gromacs/mdrun/legacymdrunoptions.cpp

patching file ./src/gromacs/mdrun/legacymdrunoptions.h

patching file ./src/gromacs/mdrun/md.cpp

patching file ./src/gromacs/mdrun/minimize.cpp

patching file ./src/gromacs/mdrun/replicaexchange.cpp

patching file ./src/gromacs/mdrun/replicaexchange.h

patching file ./src/gromacs/mdrun/rerun.cpp

patching file ./src/gromacs/mdrun/runner.cpp

patching file ./src/gromacs/modularsimulator/expandedensembleelement.cpp

patching file ./src/gromacs/taskassignment/decidegpuusage.cpp

patching file ./src/gromacs/taskassignment/include/gromacs/taskassignment/decidegpuusage.h

PLUMED is compiled with MPI support so you can configure gromacs-2023 with MPI

==> Ran patch() for gromacs

==> gromacs: Executing phase: 'cmake'

==> gromacs: Executing phase: 'build'

==> gromacs: Executing phase: 'install'

==> gromacs: Successfully installed gromacs-2023-melbrk7vxfl2gcbzd7rghv6yimsn66co

Stage: 1.14s. Cmake: 46.60s. Build: 2m 23.80s. Install: 7.72s. Post-install: 1.36s. Total: 3m 23.54s

[+] /data/scratch/abc123/spack/apps/linux-rocky9-x86_64_v4/gcc-12.2.0/gromacs/2023-openmpi-5.0.3-melbrk7

To break down what has happened above:

- The existing available upstream dependencies have been used from the central location as opposed to being re-installed personally

- Spack has re-used the cached tarballs that were already pulled for the first PLUMED and Gromacs variants that have already been installed

- Spack has automatically patched in PLUMED and CUDA support as requested

Let's now return to our spack find command:

spack find output

$ spack -C ${HOME}/spack-config-templates/0.23.1 find -x -p -v gromacs

-- linux-rocky9-x86_64_v4 / gcc@12.2.0 --------------------------

gromacs@2023~cp2k+cuda~cufftmp~cycle_subcounters~double+gmxapi+hwloc~intel_provided_gcc~ipo~mdrun_only+mpi+nblib~nosuffix~opencl+openmp+plumed~relaxed_double_precision+shared build_system=cmake build_type=Release cuda_arch=70,80,90 generator=make openmp_max_threads=none /data/scratch/abc123/spack/apps/linux-rocky9-x86_64_v4/gcc-12.2.0/gromacs/2023-openmpi-5.0.3-melbrk7

gromacs@2024.1~cp2k~cuda~cycle_subcounters~double+gmxapi+hwloc~intel_provided_gcc~ipo~mdrun_only~mpi+nblib~nosuffix~opencl+openmp~relaxed_double_precision+shared~sycl build_system=cmake build_type=Release generator=make openmp_max_threads=none /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-12.2.0/gromacs/2024.1-yekd62f-serial

gromacs@2024.1~cp2k~cuda~cycle_subcounters~double+gmxapi+hwloc~intel_provided_gcc~ipo~mdrun_only+mpi+nblib~nosuffix~opencl+openmp~relaxed_double_precision+shared~sycl build_system=cmake build_type=Release generator=make openmp_max_threads=none /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-12.2.0/gromacs/2024.1-openmpi-5.0.3-55bdvpa-mpi

gromacs@2024.1~cp2k+cuda~cufftmp~cycle_subcounters~double+gmxapi+hwloc~intel_provided_gcc~ipo~mdrun_only+mpi+nblib~nosuffix~opencl+openmp~relaxed_double_precision+shared~sycl build_system=cmake build_type=Release cuda_arch=70,80,90 generator=make openmp_max_threads=none /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-12.2.0/gromacs/2024.1-openmpi-5.0.3-tzp3elt-mpi

gromacs@2024.3~cp2k+cuda~cufftmp~cycle_subcounters~double+gmxapi+hwloc~intel_provided_gcc~ipo~mdrun_only+mpi+nblib~nosuffix~nvshmem~opencl+openmp~relaxed_double_precision+shared build_system=cmake build_type=Release cuda_arch=70,80,90 generator=make openmp_max_threads=none /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-12.2.0/gromacs/2024.3-openmpi-5.0.3-4tmxysw-mpi

-- linux-rocky9-x86_64_v4 / gcc@14.2.0 --------------------------

gromacs@2023~cp2k~cuda~cycle_subcounters~double+gmxapi+hwloc~intel_provided_gcc~ipo~mdrun_only+mpi+nblib~nosuffix~opencl+openmp+plumed~relaxed_double_precision+shared build_system=cmake build_type=Release generator=make openmp_max_threads=none /data/scratch/abc123/spack/apps/linux-rocky9-x86_64_v4/gcc-14.2.0/gromacs/2023-openmpi-5.0.5-2wld7jf

gromacs@2024.3~cp2k~cuda~cycle_subcounters~double+gmxapi+hwloc~intel_provided_gcc~ipo~mdrun_only~mpi+nblib~nosuffix~opencl+openmp~relaxed_double_precision+shared build_system=cmake build_type=Release generator=make openmp_max_threads=none /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-14.2.0/gromacs/2024.3-uhqvqaj-serial

gromacs@2024.3~cp2k~cuda~cycle_subcounters~double+gmxapi+hwloc~intel_provided_gcc~ipo~mdrun_only+mpi+nblib~nosuffix~opencl+openmp~relaxed_double_precision+shared build_system=cmake build_type=Release generator=make openmp_max_threads=none /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-14.2.0/gromacs/2024.3-openmpi-5.0.5-q4j2vts-mpi

So now we have Gromacs 2023 with PLUMED support (+plumed) and Gromacs 2023

with PLUMED (+plumed) and CUDA (+cuda cuda_arch=70,80,90) support

installed personally alongside the other centrally installed versions of

Gromacs.

As long as our private module path is still loaded with module use:

module use /data/scratch/${USER}/spack/privatemodules/linux-rocky9-x86_64_v4

we should see our personal versions available for use:

Output from module avail

$ module avail -l gromacs

- Package/Alias -----------------------.- Versions --------.- Last mod. -------

/data/scratch/abc123/spack/privatemodules/linux-rocky9-x86_64_v4:

gromacs-gpu/2023-openmpi-5.0.3-cuda-12.6.2-gcc-12.2.0-plumed 2025/05/14 11:47:27

gromacs-mpi/2023-openmpi-5.0.5-gcc-14.2.0-plumed 2025/05/14 10:46:26

/share/apps/rocky9/environmentmodules/apocrita-modules/spack:

gromacs-gpu/2024.1-openmpi-5.0.3-cuda-12.4.0-gcc-12.2.0 2025/05/01 10:40:43

gromacs-gpu/2024.3-openmpi-5.0.3-cuda-12.6.2-gcc-12.2.0 2025/05/12 14:47:25

gromacs-mpi/2024.1-openmpi-5.0.3-gcc-12.2.0 2025/05/01 10:40:43

gromacs-mpi/2024.3-openmpi-5.0.5-gcc-14.2.0 2025/05/08 14:48:07

gromacs/2024.1-gcc-12.2.0 2025/05/01 10:40:44

gromacs/2024.3-gcc-14.2.0 2025/05/08 14:48:07
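If the personal modules do not appear in the listing, the private path may be missing from MODULEPATH, for example in a new login session. A minimal sketch of a check, where in_modulepath is a hypothetical helper name and the path is the one used with module use above:

```shell
# Hypothetical helper: succeed if the directory given as $1 is an entry in MODULEPATH.
in_modulepath() {
    case ":${MODULEPATH}:" in
        *":$1:"*) return 0 ;;
        *)        return 1 ;;
    esac
}

private_modules="/data/scratch/${USER}/spack/privatemodules/linux-rocky9-x86_64_v4"

if in_modulepath "${private_modules}"; then
    echo "private module path is active"
else
    echo "private module path is missing; run: module use ${private_modules}"
fi
```

Wrapping MODULEPATH in colons before matching means only whole entries match, so a longer path that merely contains the private path as a prefix is not mistaken for it.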

And indeed, we can load and use our personal version:

Output from module load

$ module load gromacs-gpu/2023-openmpi-5.0.3-cuda-12.6.2-gcc-12.2.0-plumed

Loading gromacs-gpu/2023-openmpi-5.0.3-cuda-12.6.2-gcc-12.2.0-plumed

Loading requirement: cuda/12.6.2-gcc-12.2.0 openmpi/5.0.3-gcc-12.2.0 gsl/2.7.1-gcc-12.2.0

$ gmx_mpi --version

:-) GROMACS - gmx_mpi, 2023-plumed_2.9.1 (-:

Executable: /data/scratch/abc123/spack/apps/linux-rocky9-x86_64_v4/gcc-12.2.0/gromacs/2023-openmpi-5.0.3-melbrk7/bin/gmx_mpi

Data prefix: /data/scratch/abc123/spack/apps/linux-rocky9-x86_64_v4/gcc-12.2.0/gromacs/2023-openmpi-5.0.3-melbrk7

Working dir: /data/home/abc123

Command line:

gmx_mpi --version

GROMACS version: 2023-plumed_2.9.1

Precision: mixed

Memory model: 64 bit

MPI library: MPI

OpenMP support: enabled (GMX_OPENMP_MAX_THREADS = 128)

GPU support: CUDA

NB cluster size: 8

SIMD instructions: AVX_512

CPU FFT library: fftw-3.3.10-sse2-avx-avx2-avx2_128-avx512

GPU FFT library: cuFFT

Multi-GPU FFT: none

RDTSCP usage: enabled

TNG support: enabled

Hwloc support: hwloc-2.9.1

Tracing support: disabled

C compiler: /share/apps/rocky9/general/apps/spack/0.23.1/lib/spack/env/gcc/gcc GNU 12.2.0

C compiler flags: -fexcess-precision=fast -funroll-all-loops -mavx512f -mfma -mavx512vl -mavx512dq -mavx512bw -Wno-missing-field-initializers -O3 -DNDEBUG

C++ compiler: /share/apps/rocky9/general/apps/spack/0.23.1/lib/spack/env/gcc/g++ GNU 12.2.0

C++ compiler flags: -fexcess-precision=fast -funroll-all-loops -mavx512f -mfma -mavx512vl -mavx512dq -mavx512bw -Wno-missing-field-initializers -Wno-cast-function-type-strict SHELL:-fopenmp -O3 -DNDEBUG

BLAS library: External - user-supplied

LAPACK library: External - user-supplied

CUDA compiler: /share/apps/rocky9/spack/apps/linux-rocky9-x86_64_v4/gcc-12.2.0/cuda/12.6.2-7ahvfr4/bin/nvcc nvcc: NVIDIA (R) Cuda compiler driver;Copyright (c) 2005-2024 NVIDIA Corporation;Built on Thu_Sep_12_02:18:05_PDT_2024;Cuda compilation tools, release 12.6, V12.6.77;Build cuda_12.6.r12.6/compiler.34841621_0

CUDA compiler flags:-std=c++17;--generate-code=arch=compute_70,code=sm_70;--generate-code=arch=compute_80,code=sm_80;--generate-code=arch=compute_90,code=sm_90;-use_fast_math;-Xptxas;-warn-double-usage;-Xptxas;-Werror;-D_FORCE_INLINES;-fexcess-precision=fast -funroll-all-loops -mavx512f -mfma -mavx512vl -mavx512dq -mavx512bw -Wno-missing-field-initializers -Wno-cast-function-type-strict SHELL:-fopenmp -O3 -DNDEBUG

CUDA driver: 0.0

CUDA runtime: 12.60

So, as you can see, we have both PLUMED and CUDA details listed.
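The same check can be scripted rather than read by eye. The following is a sketch only: gmx_field is a hypothetical helper name, and the embedded text is an abbreviated stand-in for the real gmx_mpi --version output shown above.

```shell
# Hypothetical helper: print the value of one "Key: value" line from
# `gmx_mpi --version` output supplied on stdin.
gmx_field() {
    awk -F': *' -v key="$1" '$1 == key { print $2; exit }'
}

# Abbreviated stand-in for the real output shown above.
version_output='GROMACS version:    2023-plumed_2.9.1
GPU support:        CUDA
GPU FFT library:    cuFFT'

gpu=$(printf '%s\n' "${version_output}" | gmx_field "GPU support")
version=$(printf '%s\n' "${version_output}" | gmx_field "GROMACS version")

if [ "${gpu}" = "CUDA" ]; then
    echo "CUDA support: yes"
else
    echo "CUDA support: no"
fi

# Match either case, since both "PLUMED" and "plumed" appear in version strings above.
case "${version}" in
    *[Pp][Ll][Uu][Mm][Ee][Dd]*) echo "PLUMED patch: yes" ;;
    *)                          echo "PLUMED patch: no" ;;
esac
```

With the module loaded, version_output could instead be filled from the real command using command substitution.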

Summary

The above examples show just two of the many possible variants of Gromacs that you can compile yourself. To compile additional variants, switch the relevant options on (true) with + or off (false) with ~ in your spec and install commands, and remember that Spack will always follow the defaults unless explicitly told otherwise.

You could, for example, spec and install a much more complex variant such

as:

gromacs +cuda cuda_arch=70,80,90 +cufftmp +double +plumed ^openmpi ^fftw+openmp

which would compile with CUDA and multi-GPU FFT support via cuFFTMp, produce double precision executables, and add PLUMED support.

Or you could use:

gromacs ~mpi +plumed ^fftw~mpi

which would compile a serial version of Gromacs with the gmx (as opposed to gmx_mpi) binary, much like our central gromacs/2024.1-gcc-12.2.0 module, but with PLUMED support added.

The more complex the variant, the fewer of its dependencies are likely to be available upstream already, so bear in mind that compilation and installation time may increase as well.
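The spec-then-install routine used throughout this page can be sketched as a small dry-run helper that prints both commands for a given spec so they can be reviewed before anything is built. This is an illustration only: show_spack_commands is a hypothetical name, and the fallback to a single job when NSLOTS is unset is an assumption.

```shell
# Config template directory used throughout this page.
SPACK_CONFIG="${HOME}/spack-config-templates/0.23.1"

# Hypothetical helper: print (not run) the spec and install commands for one spec.
# Falling back to 1 job when NSLOTS is unset is an assumption.
show_spack_commands() {
    printf 'spack -C %s spec %s\n' "${SPACK_CONFIG}" "$*"
    printf 'spack -C %s install -j %s %s\n' "${SPACK_CONFIG}" "${NSLOTS:-1}" "$*"
}

# Quoting ~mpi stops the shell from attempting tilde expansion on it.
show_spack_commands gromacs '~mpi' +plumed '^fftw~mpi'
```

Running the printed spec command first shows what is already upstream and what would need to be compiled, before committing to the install.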